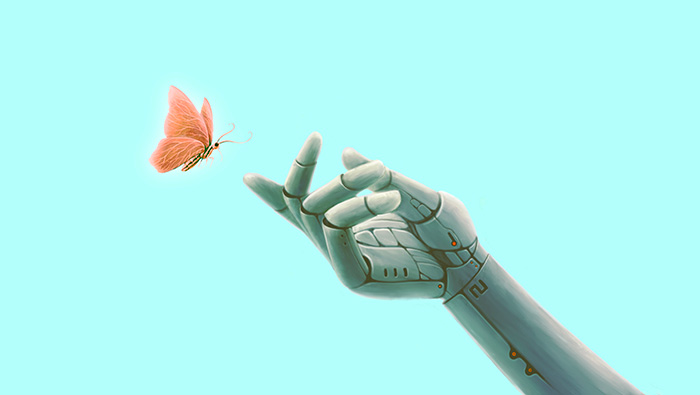

Should I Worry About Artificial Intelligence?

It’s the oldest plot in science fiction: A scientist creates something that can think for itself; the creation does just that, and turns on its creator. Despite this common trope, the term “artificial intelligence,” or AI, now turns up everywhere from health care to travel bookings. If you’ve interacted with an automated phone or text message system, you’ve spoken to an AI. AI is managing investments, assisting in medical diagnosis and placing ads on your social media. Soon, it could be driving cars and trucks.

So is a machine uprising on the horizon? Not really, said Thomas Strohmer, professor of mathematics and director of the Center for Data Science and Artificial Intelligence Research (CeDAR) at UC Davis.

“Currently there’s no ‘I’ in AI,” Strohmer said. “A true AI is very, very far away.”

But other issues need attention if AI is to bring the widest possible benefits to society, Strohmer said.

CeDAR was established in 2019 to foster groundbreaking multidisciplinary research in data science. The center provides seed funding and acts as a hub for several other data science and AI-related projects on campus, in fundamental research, agricultural sciences, health and medicine, and veterinary medicine.

Big data and algorithms

What is called AI is actually a way to combine data (often very large amounts of data) with algorithms (basically, a set of rules for calculation) to solve problems. In traditional computing, these rules are mostly set in advance (if A happens, do B). Thanks to ever-larger datasets and advances in algorithms and computing power, modern AI algorithms can learn more and more of their rules from the data itself. They are “trained” on existing data. A more accurate, if less eye-catching, name is machine learning.
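To make the difference between a rule set in advance and a rule learned from data concrete, here is a minimal sketch in Python. Everything in it is an illustrative assumption rather than anything from CeDAR's work: the temperature readings, the 30-degree cut-off and the function names are all made up, and real machine learning systems are far more elaborate.

```python
# Toy illustration only: the data and function names are hypothetical.

def fixed_rule(temperature_c):
    """Traditional computing: a person writes the rule in advance."""
    return "alert" if temperature_c > 30.0 else "ok"


def learn_threshold(examples):
    """'Training': choose the cut-off that gets the most examples right.

    `examples` is a list of (value, label) pairs, where label is True
    when the value should trigger an alert.
    """
    values = sorted(value for value, _ in examples)
    # Candidate cut-offs sit halfway between neighboring values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def correct_count(threshold):
        return sum((value > threshold) == label for value, label in examples)

    return max(candidates, key=correct_count)


# Made-up training data: (temperature in Celsius, was an alert needed?)
training_data = [
    (18.0, False), (22.0, False), (25.0, False),
    (29.0, True), (33.0, True), (36.0, True),
]

threshold = learn_threshold(training_data)


def learned_rule(temperature_c):
    """Same shape as fixed_rule, but the cut-off came from the data."""
    return "alert" if temperature_c > threshold else "ok"
```

With this made-up data, the hand-written rule and the learned rule disagree about a 29-degree reading: the programmer's guess of 30 misses it, while the cut-off found from the examples catches it. The learned rule is still only as good as the examples it was given.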

Because of their ability to handle a lot of data, AI/ML systems may see patterns that human minds would miss. But they are learning in a prescribed way, Strohmer said. They are limited by their programming and the data available to them.

“It doesn’t come up with its own ideas,” Strohmer said. AIs have been programmed to write poems or make digital artwork. But no AI could spontaneously decide to paint a sunset or jot down a sonnet.

Trust and accountability

The real issues around AI technology, Strohmer said, are in trust, accountability and responsibility.

“When you use machine learning to solve problems in the real world, you have to realize that not all problems are simply logic problems,” he said. “Human elements need to be incorporated.”

Trustworthy AI starts with reliable data: An algorithm will only be as good as the data on which it is trained. Incomplete, missing or biased data can lead to results that are incorrect, unfair or discriminatory. Companies already collect vast amounts of data on their customers, often with very little oversight.
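A small sketch can show how incomplete data leads to unfair results. The Python example below is a toy under stated assumptions: the two groups, their scores and the helper functions are invented for illustration, and the simple cut-off "model" stands in for a real machine learning system. The model is trained only on group A, then checked against both groups.

```python
# Toy illustration only: groups, scores and labels are hypothetical.

def learn_threshold(examples):
    """Choose the score cut-off that classifies the most examples correctly."""
    values = sorted(score for score, _ in examples)
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def correct_count(threshold):
        return sum((score > threshold) == label for score, label in examples)

    return max(candidates, key=correct_count)


def accuracy(threshold, examples):
    """Fraction of examples the cut-off classifies correctly."""
    correct = sum((score > threshold) == label for score, label in examples)
    return correct / len(examples)


# (score, qualified?) pairs. In this made-up data, group B's scores run
# lower than group A's for the same outcome.
group_a = [(40, False), (50, False), (60, True), (70, True)]
group_b = [(20, False), (30, False), (40, True), (50, True)]

# The training data is incomplete: group B is missing entirely.
threshold = learn_threshold(group_a)

accuracy_a = accuracy(threshold, group_a)
accuracy_b = accuracy(threshold, group_b)
print(f"cut-off: {threshold}, group A: {accuracy_a:.0%}, group B: {accuracy_b:.0%}")
```

On this invented data the cut-off is perfect for group A but rejects every qualified member of group B. Nothing in the algorithm is wrong; the gap comes entirely from what was left out of the training data.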

“We need a robust framework for data privacy,” Strohmer said.

In building an algorithm to work in the real world, you need to be aware of what you don’t know, and that there may be things you do not realize you don’t know: the unknown unknowns. Predicting how an algorithm will react under different conditions can be hard, Strohmer said. Human beings can improvise in unexpected situations, but machines cannot.

“Things that are obvious to us are not obvious to an algorithm, but we don’t necessarily know what they are,” Strohmer said. “But now we begin to understand this, and we can start building the mathematics for it.”

Algorithms can support human decision-making, but not replace it, Strohmer said. To establish trust, a human being needs to take responsibility for the algorithm.

This area of trustworthiness and accountability in how AI is applied in the real world is an important focus for CeDAR. The center is coordinating research in these areas, as well as on developing and applying AI technology. In winter quarter 2023, Strohmer will teach a new graduate class on “Fairness, Privacy, and Trustworthiness in Machine Learning.”

“We want to get students thinking about these concepts early on in their careers,” he said.